Operational Reviews

Facilitate operational review meetings with team, service and company operation metrics

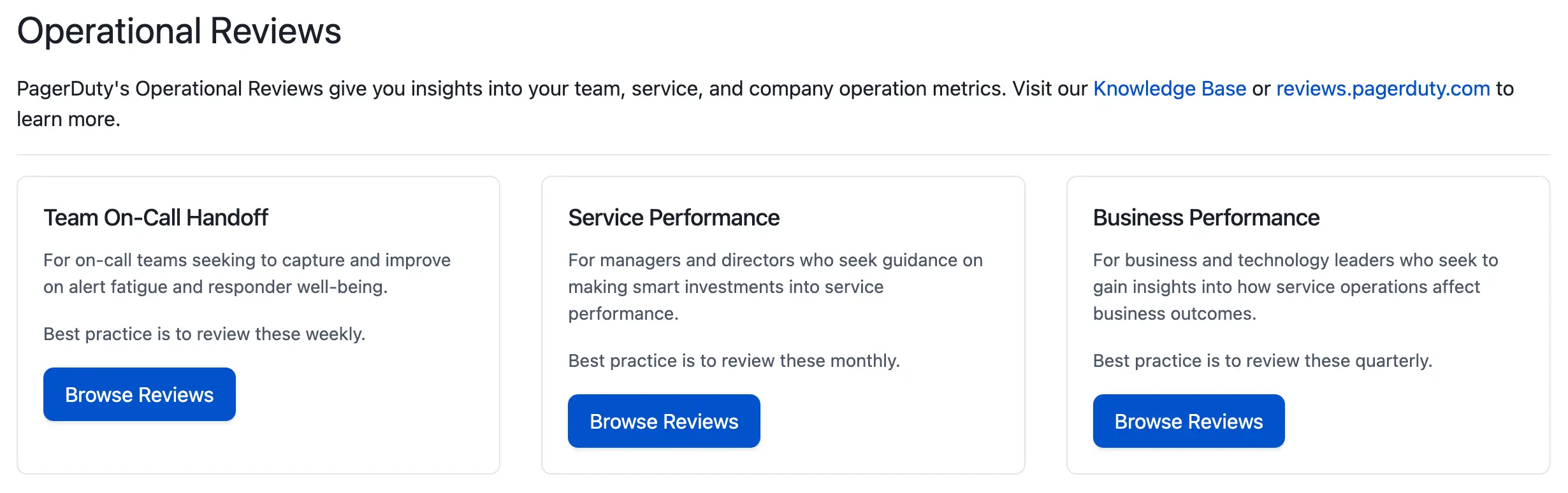

The Operational Reviews feature offers metrics for three types of reviews, each targeted at a different level of leadership in a digital business. These review types facilitate weekly, monthly and quarterly operational review meetings:

- Team On-Call Handoff Reviews (weekly)

- Service Performance Reviews (monthly)

- Business Performance Reviews (quarterly)

We designed these reviews to analyze past performance, and they are not meant to serve ad-hoc or real-time analytics needs. For in-depth information on the background of the Operational Reviews feature, please read our Reviews Guide.

You can view Operational Reviews in the web app at Analytics > Operational Reviews.

Operational Reviews menu

Availability

The Operational Reviews feature is included on the following pricing plans:

- Business

- Digital Operations (legacy)

- Enterprise for Incident Management

Please contact our Sales Team if you would like to upgrade your plan to access this feature.

Required User Permissions

All users, with the exception of Restricted Access and Limited Stakeholder roles, can view and manage Operational Reviews.

Foundational Concepts

Each review incorporates interruptions and major incidents.

Interruptions

An interruption occurs when an on-call responder receives an SMS (text), mobile push or phone call notification for an incident. Our metrics count unique notifications: notifications of the same type sent through several channels (SMS, push, phone call) to the same destination are counted as one interruption. Email notifications are excluded, as email is not considered an interrupting channel.

- Business Hours Interruptions (Color Signifier: Yellow): The count of interrupting notifications sent between 8am and 6pm Monday to Friday, in the user's local time.

- Off Hours Interruptions (Color Signifier: Orange): The count of interrupting notifications sent between 6pm and 10pm Monday to Friday or all day over the weekend, in the user's local time.

- Sleep Hours Interruptions (Color Signifier: Red): The count of interrupting notifications sent between 10pm and 8am, in the user's local time.
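The three windows above can be sketched as a small classifier. This is an illustrative sketch, not PagerDuty's implementation: the function name `classify_interruption` is hypothetical, the boundaries are treated as half-open (8am counts as business hours, 6pm as off hours), and sleep hours are assumed to take precedence over the "all day over the weekend" rule, since the definitions do not say how that overlap is resolved.

```python
from datetime import datetime

def classify_interruption(local_time: datetime) -> str:
    """Return 'business', 'off' or 'sleep' for a notification's send time.

    `local_time` is assumed to already be in the user's local time zone.
    """
    hour = local_time.hour
    is_weekend = local_time.weekday() >= 5  # Saturday = 5, Sunday = 6

    # Assumption: sleep hours (10pm to 8am) win on every day, weekends included.
    if hour >= 22 or hour < 8:
        return "sleep"
    if is_weekend:
        return "off"        # all day over the weekend, outside sleep hours
    if hour < 18:
        return "business"   # 8am to 6pm, Monday to Friday
    return "off"            # 6pm to 10pm, Monday to Friday

# January 3, 2024 is a Wednesday; January 6, 2024 is a Saturday.
print(classify_interruption(datetime(2024, 1, 3, 9, 0)))
print(classify_interruption(datetime(2024, 1, 6, 12, 0)))
```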

Major Incidents

We define a major incident as any high-priority incident that requires a coordinated response, often across multiple teams. Major incidents are typically highly visible to customers, so resolving them is of the greatest importance. Most organizations refer to major incidents as P1, P2, or SEV-1, SEV-2. In PagerDuty Analytics, an incident counts as major if it is assigned one of the top two levels of your priority settings, or if multiple responders are added and they acknowledge the incident. Anyone who accepts a request to join the incident, who acknowledges the incident, or who reopens it is included in the responder count. In the Business Performance Review under Major Incident Details, these designations appear on the upper left-hand side as pills that can say P1, P2, SEV-1, SEV-2 or MRI (Multiple Responders Involved).
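As a rough sketch of the rule described above, the check below treats an incident as major when it carries one of the top two priority levels or when more than one responder engaged with it. The `Incident` class and its fields are hypothetical and exist only for illustration; they are not part of any PagerDuty API.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Incident:
    # Hypothetical fields for illustration only.
    priority_rank: Optional[int]  # 1 = top priority level, None = no priority set
    engaged_responders: int       # responders who acknowledged, joined or reopened

def is_major_incident(incident: Incident) -> bool:
    """True if the incident is in the top two priority levels or has multiple responders."""
    top_two_priority = incident.priority_rank is not None and incident.priority_rank <= 2
    multiple_responders = incident.engaged_responders > 1
    return top_two_priority or multiple_responders

print(is_major_incident(Incident(priority_rank=2, engaged_responders=1)))     # top-two priority
print(is_major_incident(Incident(priority_rank=None, engaged_responders=3)))  # multiple responders
print(is_major_incident(Incident(priority_rank=4, engaged_responders=1)))     # neither
```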

Review Configuration

In the web app, navigate to Analytics > Operational Reviews. When you first enable the Operational Reviews feature, reviews generate automatically based on your account’s Team data, so you do not need to create them manually. If the feature was just enabled, you may not see reviews until PagerDuty has analyzed your account’s performance data, which can take approximately one hour. If you do not see any reviews, ensure that you are a member of at least one Team so that reviews can generate.

Note

Operational Reviews will only create reviews for Teams that were present in the account upon enablement. At this time, Teams created after this point will not have reviews generated automatically.

Out of the box, each user will have a unique set of default reviews based on their Team membership, and they can subscribe to or unsubscribe from the reviews they have been assigned. If you would like to view reviews for a Team that you are not on, you will need to join that Team or create a custom review that covers the Team with your desired data.

Default reviews generate on a set schedule:

- Team On-Call Handoff Reviews generate every Monday morning.

- Service Performance Reviews generate the first of every month.

- Business Performance Reviews generate the first of every calendar quarter.

- Custom reviews – those that you create for yourself – generate on the cadence set when they were created.
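The default cadences above can be illustrated with a few date helpers. This is only a sketch of the calendar arithmetic: the function names are hypothetical, the helpers return the next date strictly after `today`, and the exact time of day at which reviews generate is not modeled.

```python
from datetime import date, timedelta

def next_monday(today: date) -> date:
    """Next Monday strictly after `today` (Team On-Call Handoff cadence)."""
    days_ahead = (7 - today.weekday()) % 7 or 7  # Monday is weekday 0
    return today + timedelta(days=days_ahead)

def first_of_next_month(today: date) -> date:
    """First day of the following month (Service Performance cadence)."""
    if today.month == 12:
        return date(today.year + 1, 1, 1)
    return date(today.year, today.month + 1, 1)

def first_of_next_quarter(today: date) -> date:
    """First day of the following calendar quarter (Business Performance cadence)."""
    next_quarter_month = ((today.month - 1) // 3 + 1) * 3 + 1  # 4, 7, 10 or 13
    if next_quarter_month > 12:
        return date(today.year + 1, 1, 1)
    return date(today.year, next_quarter_month, 1)

today = date(2024, 1, 3)  # a Wednesday
print(next_monday(today), first_of_next_month(today), first_of_next_quarter(today))
```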

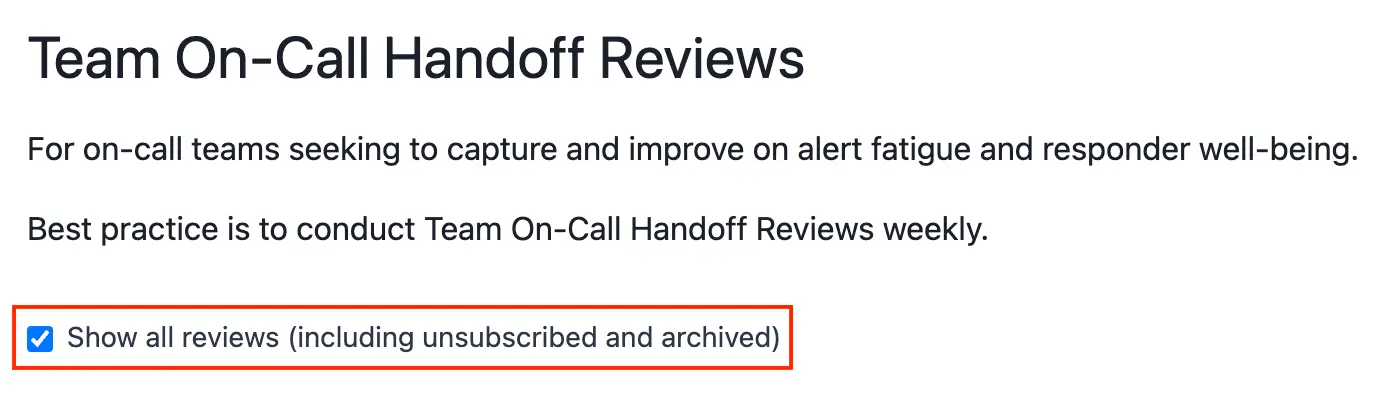

Show All Reviews

- Navigate to Analytics > Operational Reviews and then click Browse Reviews under your desired review type.

- To view reviews that you are not currently subscribed to, check the Show all reviews (including unsubscribed and archived) checkbox.

Show all reviews checkbox

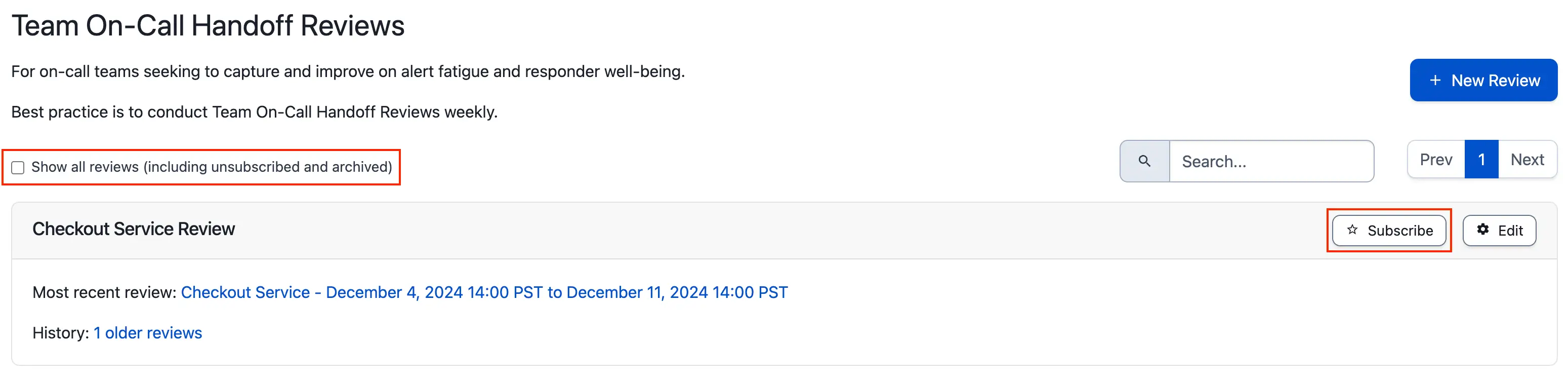

Subscribe or Unsubscribe From a Review

- Navigate to Analytics > Operational Reviews and then click Browse Reviews under your desired review type.

- If you would like to subscribe to a review: Check the Show all reviews (including unsubscribed and archived) checkbox. Search for the desired review and click Subscribe on the right side.

Subscribe to a review

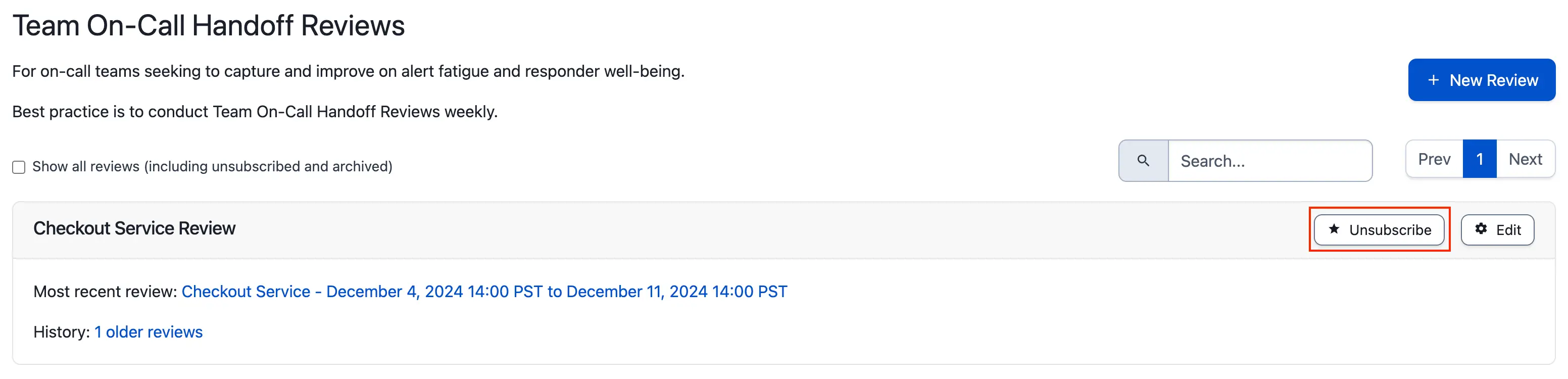

If you would like to unsubscribe from a review: Search for it and click Unsubscribe on the right side.

Unsubscribe from a review

Create a Custom Review

If you would like to generate reviews of multiple, often less-related Teams, you can create a custom review. Custom reviews will generate for the first time at the end of the time period you set when you created the review. For example, if you created a review on Tuesday that goes from Monday 8:00am through Monday 7:59am, you will have to wait until the upcoming Monday to see a review.

- Navigate to Analytics > Operational Reviews, click Browse Reviews under the desired review type, and click the New Review button.

- On the next screen, enter:

  - Review Name: A meaningful name that reflects the intended purpose of your review meeting (e.g., if you need a review that incorporates data from multiple teams, you could enter “Customer Support, Data, Web Triage - Team On Call Handoff Review”).

  - Team: Search and select the Team(s) you would like to review.

  - Frequency (Team On-Call Handoff Reviews only): Select the number of weeks between reviews, and the date and time when you would like the review to generate.

- Click Save to create your custom review.

Edit Reviews

Default Team On-Call Handoff Reviews allow you to edit the number of weeks, days of the week, and hour of day that they generate. Custom reviews of any type allow you to edit any aspect of the review that was customizable when created. Default Service Performance Reviews and Business Performance Reviews are not currently editable.

To edit a review:

- Navigate to Analytics > Operational Reviews and click Browse Reviews under your desired review type.

- Search for your desired review and click Edit on the right side.

- Edit the details available to you (see the summary of options above) and click Save.

Delete Team On-Call Handoff Reviews

Currently, you can only delete Team On-Call Handoff reviews.

Note

You cannot delete Service Performance and Business Performance reviews. However, clicking Unsubscribe will hide any unwanted reviews from your list.

To delete a Team On-Call Handoff review:

- Navigate to Analytics > Operational Reviews and click Browse Reviews under the Team On-Call Handoff review type.

- Search for your desired review and click Edit on the right side.

- Click Delete on the right and then click Delete Review in the prompt that appears.

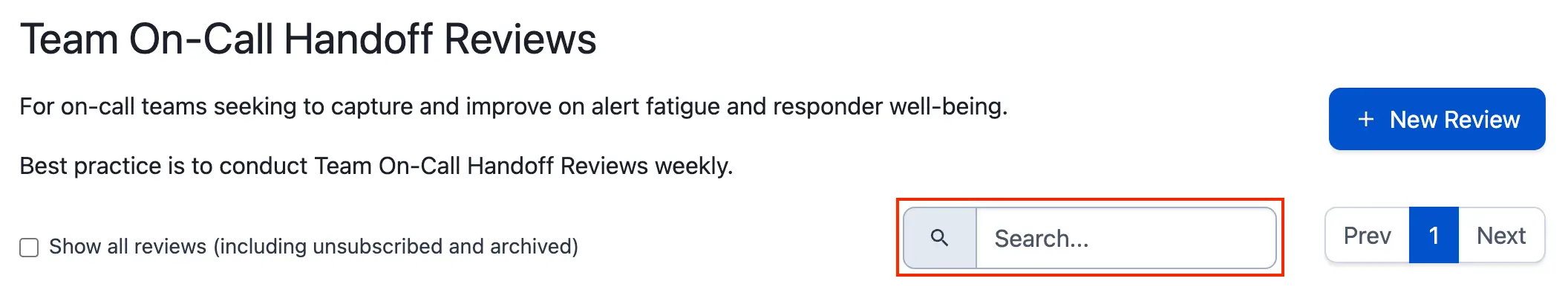

Search for a Review

Each list of Team On-Call Handoff, Service Performance and Business Performance reviews has a search capability, which lets users with many reviews find specific ones quickly. To access the review search, navigate to Analytics > Operational Reviews, select the name of the desired review type, and search for the desired team(s) and/or review name(s).

Search for a review

Review Types

Last Report Comparison

For each review type, the sparklines compare the most recent review period to the four previous periods in order to show how the metrics are trending. To the left of each sparkline, you will see the metric for the current period. Below each sparkline, you will see the metric for the Last period and the percent change between the two periods.
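The percent change shown below each sparkline can be illustrated as follows. This is a minimal sketch that assumes the conventional formula (current minus last, divided by last); the function name is hypothetical, and how the product displays a change from a zero baseline is not documented here, so the sketch returns `None` in that case.

```python
from typing import Optional

def percent_change(current: float, last: float) -> Optional[float]:
    """Percent change from the last period to the current period."""
    if last == 0:
        return None  # undefined when the previous period's metric is zero
    return (current - last) * 100 / last

# 12 interruptions this period versus 10 in the last period.
print(percent_change(12, 10))
# 5 interruptions this period versus 10 in the last period.
print(percent_change(5, 10))
```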

Team On-Call Handoff Reviews

Team On-Call Handoff Reviews can help Teams track and reduce alert fatigue and improve responder well-being. The metrics focus on interruptions to responders during business hours, off hours and sleep hours, MTTA/MTTR, escalations, and the most frequent incidents. Team On-Call Handoff Reviews generate every Monday by default.

Metrics

Team Metrics:

- Major Incidents: Count of incidents on the Team’s technical services with high Priority (P1, P2) or Severity (Sev-1, Sev-2), and/or requiring the attention of more than one responder.

- High Urgency Incidents: Count of high urgency incidents within the time period designated for the review.

- High Urgency Incident MTTR: The mean time to resolve (MTTR) for high urgency incidents.

- Off Hours Interruptions: The count of interrupting notifications sent between 6pm and 10pm Monday to Friday, or all day over the weekend, in the user's local time.

- Escalations: Total count of escalations that occurred for the Team or Teams during the period covered by the weekly review, including both manual and time-out escalations.

- High Urgency Incident MTTA: The mean time to acknowledge (MTTA) for high urgency incidents.

- Sleep Hours Interruptions: The count of interrupting notifications sent between 10pm and 8am, in the user's local time.

- Loudest Service: The service that sends out the highest number of unique interrupting notifications.

- Loudest Incident: A singular incident with the highest number of interrupting notifications sent out until it has been resolved.

- Interruptions By Service: A breakdown of interruptions per service, displayed in a pie chart.
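The Loudest Service, Loudest Incident and Interruptions By Service metrics are all counts over the same set of interrupting notifications. The sketch below shows one way to derive them with `collections.Counter`; the input shape (one `(service, incident_id)` tuple per unique interrupting notification) and the sample names are assumptions made for illustration.

```python
from collections import Counter

# Hypothetical sample data: one tuple per unique interrupting notification.
interruptions = [
    ("checkout-api", "INC-1"),
    ("checkout-api", "INC-1"),
    ("checkout-api", "INC-2"),
    ("search", "INC-3"),
]

# Interruptions By Service: breakdown of interruptions per service.
by_service = Counter(service for service, _ in interruptions)

# Interruptions per incident, used to find the loudest single incident.
by_incident = Counter(incident_id for _, incident_id in interruptions)

loudest_service, service_count = by_service.most_common(1)[0]
loudest_incident, incident_count = by_incident.most_common(1)[0]

print(dict(by_service))
print(loudest_service, service_count)
print(loudest_incident, incident_count)
```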

Individual Metrics:

- Company Averages This Period: The company average shows the average interruption summary among incident responders for a specified time period. We take all individuals who were interrupted at least once, use their time zones to determine when interruptions took place, and divide the totals by the total number of interrupted individuals for the time period. We then use this data and surface comparisons on the Team individual level to highlight whether any individual member experienced below or above average operational load relative to the rest of their peers.

- Average Hours Interrupted: The number of hours in a day where a user receives one or more interrupting notifications. The maximum is 24 per day.

- Average Total Interruptions: The average total interruptions per user in the time range designated by the review.

- # Responders Interrupted This Period: Number of responders interrupted in the period designated by the review. Each user listed will also have a Current Vibe:

  - Sad face: Any responder who was interrupted once during sleep hours, twice during off hours or more than five times in total will have a Sad face.

  - Neutral face: Any responder who has one off hours interruption and five or fewer business hours interruptions will have a Neutral face.

  - Happy face: Any responder who received no off hours or sleep hours interruptions, and has fewer than five total interruptions, will have a Happy face.

- Most Frequent Incidents: Recurring and interrupting incidents of identical description. Each metric will include the incident Description and total count of Interruptions.

- Incidents from this report: A table of incidents included in this report’s data.
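The Current Vibe rules listed above can be sketched as a small decision function. Several points are assumptions rather than documented behavior: "once" and "twice" are read as "at least once" and "at least twice", the Sad rule is checked first when rules overlap, and any case the rules do not cover (for example, exactly five business hours interruptions and nothing else) falls through to Neutral. The function name is hypothetical.

```python
def current_vibe(business: int, off: int, sleep: int) -> str:
    """Return 'sad', 'neutral' or 'happy' from a responder's interruption counts."""
    total = business + off + sleep

    # Sad: any sleep hours interruption, two or more off hours interruptions,
    # or more than five interruptions in total (assumed to take precedence).
    if sleep >= 1 or off >= 2 or total > 5:
        return "sad"

    # Happy: no off hours or sleep hours interruptions and fewer than five in total.
    if off == 0 and total < 5:
        return "happy"

    # Neutral: one off hours interruption with a low business hours count,
    # plus (by assumption) any remaining case the rules leave unspecified.
    return "neutral"

print(current_vibe(business=2, off=0, sleep=0))
print(current_vibe(business=3, off=1, sleep=0))
print(current_vibe(business=0, off=0, sleep=1))
```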

Service Performance Reviews

Service Performance reviews are designed to guide smart investments into service performance. The metrics focus on aggregate measurements, such as time without major incidents, MTTR for major and high urgency incidents, and detailing the breakdown of the incidents and services that may be worth the most attention and investment. Service Performance Reviews generate on the 1st of every month by default.

Metrics

- Time W/O Major Incident: The total amount of time, during the reporting period, without an open major incident. Concurrent major incidents are counted together as a single measure.

- Time W/O High Urg. Incident: The calculated net duration of high-urgency incidents in a month (removing any overlapping time) and the calculated percentage of time without high-urgency incidents.

- Major Incident MTTR: The mean time to resolve (MTTR) for incidents on the service with Priority (P1, P2) or Severity (Sev-1, Sev-2), and/or requiring the attention of more than one responder.

- High Urgency Incident MTTR: The mean time to resolve (MTTR) for high urgency incidents on the service.

- Major Incidents Count: Count of major incidents.

- High Urgency Count: Count of high urgency incidents.

- Longest Major Incident TTR: The longest time to resolve (TTR) on a major incident.

- Longest Urgent Incident TTR: The longest time to resolve (TTR) on a high urgency incident.

- Longest Incident Duration (High Urg.): The longest total duration from trigger to resolution on a high urgency incident.

- Loudest Services: The technical services that had the highest number of interrupting notifications.

- Most Frequent Incidents: Recurring and interrupting incidents of identical description.

- Business Service At Risk: A measure of service stability based on the amount and duration of incidents of your technical services mapped into the business services in your system. There are two ways of calculating the business service at risk:

  - One is to identify the business service with the longest single major incident. This is calculated by adding up the overlapping total major incident durations on the technical services that support a business service (i.e., flattening the time).

  - The second is to identify the business service with the highest major incident volume. This is calculated by adding up the major incident count on the technical services that support a business service.

- Worst Performing Technical Services: Services with the largest number of incidents. Incident count includes both high and low-urgency. Business Service affiliations and Team associations that the technical service may have will also appear here.
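The "time without" metrics above depend on counting concurrent incidents once, that is, flattening overlapping time. A minimal sketch of that interval merge follows. The function names are hypothetical, and clipping each incident to the reporting period is an assumption about how boundaries are handled.

```python
from datetime import datetime, timedelta

def merged_duration(intervals):
    """Total time covered by (start, end) intervals, counting overlaps once."""
    total = timedelta(0)
    current_start = current_end = None
    for start, end in sorted(intervals):
        if current_end is None or start > current_end:
            # A gap: close the previous merged interval and start a new one.
            if current_end is not None:
                total += current_end - current_start
            current_start, current_end = start, end
        else:
            # Overlap: extend the current merged interval if needed.
            current_end = max(current_end, end)
    if current_end is not None:
        total += current_end - current_start
    return total

def time_without_incident(period_start, period_end, incidents):
    """Reporting period length minus the flattened incident time."""
    # Assumption: incidents are clipped to the reporting period boundaries.
    clipped = [(max(s, period_start), min(e, period_end))
               for s, e in incidents if s < period_end and e > period_start]
    return (period_end - period_start) - merged_duration(clipped)

day_start, day_end = datetime(2024, 1, 1), datetime(2024, 1, 2)
incidents = [
    (datetime(2024, 1, 1, 1), datetime(2024, 1, 1, 3)),
    (datetime(2024, 1, 1, 2), datetime(2024, 1, 1, 4)),   # overlaps the first
    (datetime(2024, 1, 1, 10), datetime(2024, 1, 1, 11)),
]
quiet = time_without_incident(day_start, day_end, incidents)
print(quiet, f"{quiet / (day_end - day_start):.1%}")
```

In the sample, the first two incidents overlap and flatten to three hours, so four of the 24 hours have an open incident.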

Business Performance Reviews

Business Performance Reviews help business and technology leaders gain insight into how service operations affect business outcomes. The metrics focus on the company cost of operating services (hours lost responding, cost of responder time), the impact on customers (aggregate major incidents duration), and a summary of high-impact incidents from the quarter. Business Performance Reviews generate every calendar quarter (April 1st, July 1st, Oct 1st, and Jan 1st) by default.

Metrics

- Time W/O Major Incident: The total amount of time, during the reporting period, without an open major incident. Concurrent major incidents are counted together as a single measure.

- Est. Response Cost: The estimated cost, in US dollars, that your company spent on employee incident response. This is a fixed cost calculation of $50 per hour.

- Responder Cost in Hours: The actual cost, in people/labor hours, that your company spent on employee response to major incidents (i.e., each engaged responder's time from acknowledgement to resolution). This is also known as your responder burn rate.

- Major Incidents: Count of incidents on the Team’s technical services with Priority (P1, P2) or Severity (Sev-1, Sev-2), and/or requiring the attention of more than one responder.

- Major Incident MTTR: The mean time to resolve (MTTR) for major incidents.

- Most Time in Response: The top three teams that were affected by major incidents along with the number of major incidents, cost in hours, and the percentage of time spent on unplanned effort that the incidents had caused.

- Business Services At Risk: A measure of service stability based on the amount and duration of incidents of your technical services mapped into the business services in your system. There are two ways of calculating the business service at risk:

  - One is to identify the business service with the longest single major incident. This is calculated by adding up the overlapping total major incident durations on the technical services that support a business service (i.e., flattening the time).

  - The second is to identify the business service with the highest major incident volume. This is calculated by adding up the major incident count on the technical services that support a business service.

- Technical Services Hit List: A list of three services, in order of worst affected, that need the most attention based on frequency of major incidents. We determine the three worst services by the Time w/o Major Incident metric, with the lowest times shown.

- Major Incident Details: View the Est. Response Cost, Duration, Technical Service and Business Services Affected for each major incident.
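The relationship between Responder Cost in Hours and Est. Response Cost can be sketched as below. The $50 hourly rate and the acknowledgement-to-resolution window come from the metric definitions above. Multiplying the two is an assumption about how the estimate is derived, and the function names and input shape are hypothetical.

```python
from datetime import datetime

HOURLY_RATE_USD = 50  # fixed rate stated in the Est. Response Cost definition

def responder_hours(engagements):
    """Sum each engaged responder's time from acknowledgement to resolution, in hours."""
    seconds = sum((resolved - acked).total_seconds() for acked, resolved in engagements)
    return seconds / 3600

def estimated_response_cost(engagements):
    """Assumed estimate: responder hours multiplied by the fixed hourly rate."""
    return responder_hours(engagements) * HOURLY_RATE_USD

# Two responders on one major incident resolved at 12:00.
engagements = [
    (datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 12, 0)),   # 2 hours
    (datetime(2024, 1, 1, 10, 30), datetime(2024, 1, 1, 12, 0)),  # 1.5 hours
]
print(responder_hours(engagements), estimated_response_cost(engagements))
```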

FAQ

I just had the Operational Reviews feature enabled. Why don’t I see any reviews yet?

After enablement, it can take approximately one hour to analyze your account’s data. Please ensure you are assigned to at least one Team so that Team reviews can generate.

Why don't I see all my Teams on my Team On-Call Handoff Reviews?

After the feature is enabled, all existing Teams will have a review generated. However, Teams created after this will not have reviews added automatically. To generate reviews for new Teams, you can click the New Review button.

How are my default reviews determined, and how do I change what reviews I can see?

Out of the box, your reviews will generate based on the Teams you are on. If you would like to view reviews for a Team that you are not on, you will need to join that Team or create a custom review that covers the Team with your desired data.

How do Operational Reviews deal with different time zones?

Time zones are utilized in three ways:

- To determine business, off, and sleep hours.

- To display the time of an incident.

- To determine the date and time range that a review covers.

When determining if an interruption happened during business, off, or sleep hours, PagerDuty uses the time zone set on the user’s profile. If a user has not set their time zone, the user profile will inherit the account time zone.

When displaying the details of a specific incident in one of the reviews, PagerDuty will display time based on the user’s time zone. If a user has not set their time zone, the user profile will inherit the account time zone.

When creating a review, users will need to select the time range based on the displayed account time zone. Default reviews generate on weekly, monthly, and quarterly cadences based on the account time zone.

Are incident times based on when they were created or when they were resolved?

Results include only the incidents created within the selected timeframe.
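To illustrate the answer above, the sketch below selects incidents by creation time only. The dictionary shape and function name are hypothetical, and treating the period as half-open (start included, end excluded) is an assumption.

```python
from datetime import datetime

def incidents_in_period(incidents, period_start, period_end):
    """Keep incidents whose creation time falls inside the review period."""
    # Assumption: the period is half-open, [period_start, period_end).
    return [i for i in incidents if period_start <= i["created_at"] < period_end]

incidents = [
    # Created before the period, so excluded even if it was resolved during it.
    {"id": "A", "created_at": datetime(2024, 1, 7, 23, 0)},
    {"id": "B", "created_at": datetime(2024, 1, 8, 9, 0)},
]
selected = incidents_in_period(
    incidents, datetime(2024, 1, 8, 8, 0), datetime(2024, 1, 15, 8, 0)
)
print([i["id"] for i in selected])
```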

Updated 10 months ago